Introduction: Architecture Is a Long-Term Bet

In 2026, many engineering teams still wrestle with the same architectural dilemma: monolith or microservices. The debate is measured in delivery speed, cost, and operational friction.

Consider the outcomes.

- Amazon Prime Video famously consolidated its distributed audio/video monitoring service into a monolithic design, cutting that service’s infrastructure costs by over 90%.

- Netflix, by contrast, leaned fully into microservices back in 2009, enabling global rollouts and high-velocity deployments — but only after their monolith couldn’t sustain availability demands.

- Etsy initially thrived on a monolithic foundation, then evolved toward service boundaries as team autonomy and feature velocity became bottlenecks.

- And Segment moved from 50+ services back to a monolith, citing debugging pain and deployment overhead.

The point: there’s no universal “right” answer. This post will unpack where monoliths still shine, where microservices genuinely pay off, and how to evolve without stalling, overspending, or rolling back.

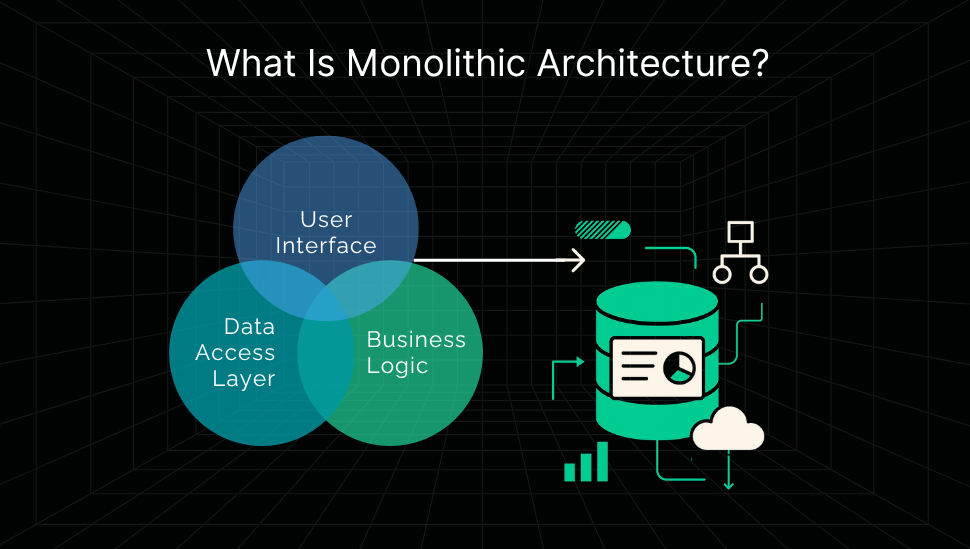

What Is Monolithic Architecture?

A monolithic architecture is a single, unified codebase where all components (UI, business logic, and data access) live together. It’s deployed as one unit. Think of it as one big block of software.

Why it Works

- Simplicity: Easy to develop, test, and deploy — especially for small teams.

- Performance: No inter-service network latency.

- Unified Data: Strong consistency through a single shared database.

Where it Breaks Down

- Scalability: You have to scale the whole application, not just the bottleneck.

- Deployment Risk: One small change means a full redeploy.

- Stack Lock-In: Hard to adopt new tech for parts of the system.

Why this matters: Monoliths work best when speed and simplicity matter more than long-term flexibility. Many successful products start here, and some never need to leave.

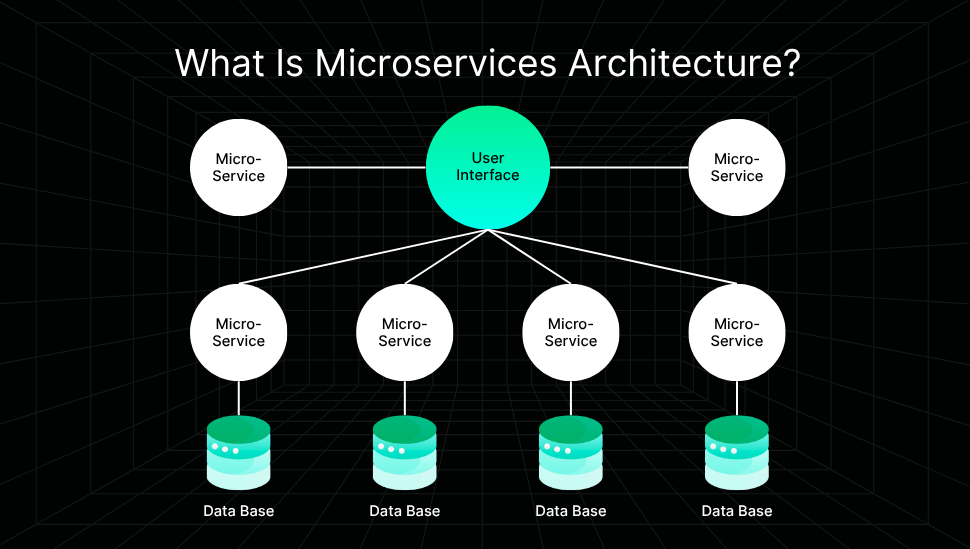

What Is Microservices Architecture?

Microservices architecture splits an application into small, independently deployable services, each handling a specific business function and owning its own data. These services communicate over APIs or message queues.

Why it Works

- Autonomy: Teams can work, deploy, and scale services independently.

- Flexibility: Choose the best-fit language, framework, or infra per service.

- Resilience: Failures are isolated, not system-wide.

Where it Breaks Down

- Complexity: More moving parts, more orchestration.

- Data Consistency: Managing state across services is hard.

- Ops Overhead: Requires mature DevOps, CI/CD, and monitoring.

Why this matters: Microservices enable scale, speed, and modularity, but only when your team and processes are ready to support the extra complexity.

Microservices vs Monolith: A Clear Comparison

Choosing between architectures means trading off simplicity, flexibility, and scalability. Here’s how they compare across the dimensions that matter:

| Criteria | Monolithic Architecture | Microservices Architecture |
| --- | --- | --- |
| Deployment | One unified deployment – a single artifact and pipeline | Each service is deployed independently, often via separate pipelines |
| Scalability | Vertical or coarse-grained scaling of the entire app | Fine-grained horizontal scaling per service/component |
| Fault Isolation | A bug or failure in one module can crash the entire system | Failures are contained within individual services |
| Tech Flexibility | One language/framework across the entire stack | Teams can choose best-fit tech for each service |
| Performance | Faster internally (in-memory calls, no network latency) | Slightly slower (remote calls, network overhead) |
| Data Consistency | Centralized database enables strong consistency (ACID) | Distributed databases lead to eventual consistency or compensation |
| Team Autonomy | Central ownership and decision-making | Teams own and operate services end-to-end |
| DevOps Overhead | Simpler CI/CD, monitoring, and release processes | Requires advanced tooling for CI/CD, observability, and automation |
| Codebase Complexity | Single, cohesive codebase – easier to search and debug | Multiple codebases – harder to track changes across services |
| Evolution Speed | Changes impact the entire system – high coordination cost | Faster iteration within services – more flexible at scale |

Why this matters: Monoliths simplify execution when the product is early, the team is small, and change is centralized. Microservices enable speed and safety when moving fast at scale, but they require the ecosystem and discipline to support it.

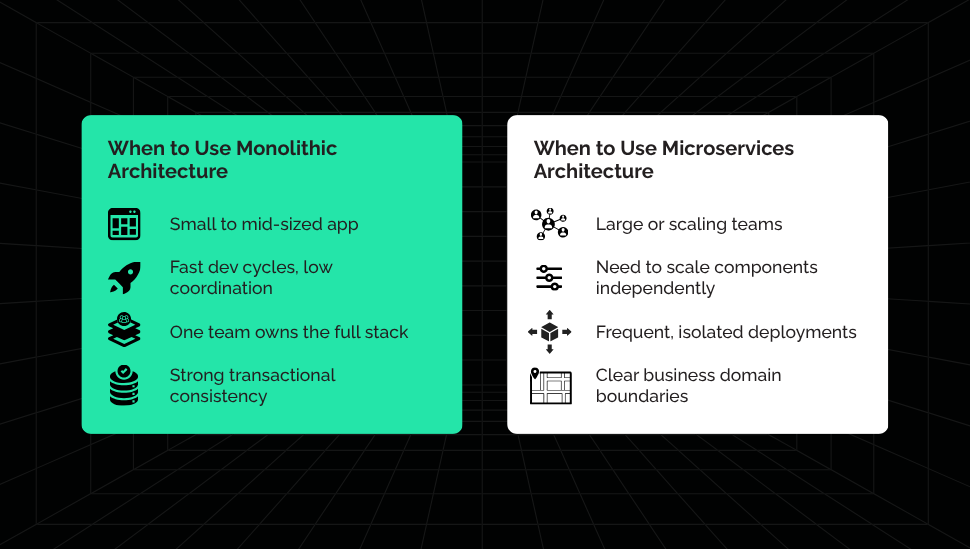

When to Use Monolithic Architecture

Monoliths aren’t legacy by default. In many cases, they’re the smartest starting point, especially when speed, cohesion, and simplicity are top priorities.

Use a monolith when:

- You’re building a small to mid-sized app that doesn’t require massive scale (yet).

- You want fast development cycles with minimal coordination overhead.

- You have a tight-knit team that can manage the full stack.

- Transactional integrity is critical and easier to enforce in a single system.

Why this matters: Starting with a monolith doesn’t limit you; it often speeds you up.

Many engineering leaders (including Martin Fowler, who popularized the MonolithFirst principle way back in 2015) argue that early-stage teams benefit more from cohesion and simplicity than premature modularity.

A well-structured monolith only needs to evolve into microservices when (and if) the need arises.

When to Use Microservices Architecture

Microservices shine when complexity becomes a limiting factor. If scaling teams, scaling features, or scaling infrastructure is slowing you down, it might be time to modularize.

Use microservices when:

- You have large or growing teams that need autonomy.

- The app needs to scale specific parts independently (e.g., payments, search).

- You’re releasing features frequently and want independent deploy cycles.

- Business domains are well understood and can be isolated cleanly.

Why this matters: Microservices are for organizational scale. But they demand process maturity, not just good architecture.

Migrating: Monolith to Microservices

Breaking down a monolith means solving a real bottleneck without destabilizing the system. The right way to migrate is step-by-step, with clear visibility, controlled rollout, and verifiable outcomes.

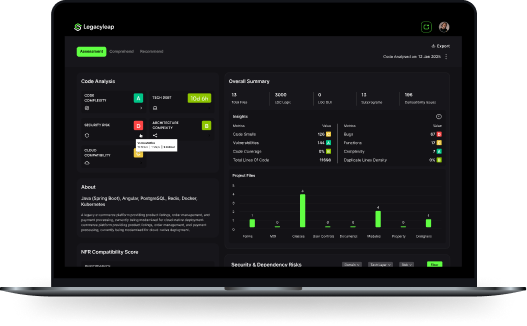

1. Assess Readiness

Start by measuring whether your team and system are even ready for microservices. At Legacyleap, we quantify this through a Readiness Score that blends system complexity, dependency density, and DevOps maturity. A tightly coupled VB6 system with no CI/CD pipelines will score very differently from a modular .NET Framework app with active containerization.
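To make the idea of a blended score concrete, here is a minimal sketch. It is not Legacyleap’s actual scoring model: the `SystemProfile` fields, the 0 to 1 input scale, and the weights are all hypothetical, chosen only to show how the three signals might combine into one number.

```python
from dataclasses import dataclass


@dataclass
class SystemProfile:
    # All three inputs are assumed to be normalized to the range 0.0-1.0.
    complexity: float          # 0.0 = simple, 1.0 = very complex
    dependency_density: float  # 0.0 = loosely coupled, 1.0 = tightly coupled
    devops_maturity: float     # 0.0 = no CI/CD, 1.0 = fully automated


# Hypothetical weights for illustration only; they sum to 1.0.
WEIGHTS = {"complexity": 0.3, "dependency_density": 0.4, "devops_maturity": 0.3}


def readiness_score(profile: SystemProfile) -> float:
    """Return a 0-100 score; higher means more ready to decompose."""
    for name in WEIGHTS:
        value = getattr(profile, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    # Complexity and coupling count against readiness; DevOps maturity counts for it.
    score = (
        WEIGHTS["complexity"] * (1.0 - profile.complexity)
        + WEIGHTS["dependency_density"] * (1.0 - profile.dependency_density)
        + WEIGHTS["devops_maturity"] * profile.devops_maturity
    )
    return round(100 * score, 1)


# Two made-up profiles mirroring the contrast described above.
legacy_vb6 = SystemProfile(complexity=0.8, dependency_density=0.9, devops_maturity=0.0)
modular_dotnet = SystemProfile(complexity=0.4, dependency_density=0.3, devops_maturity=0.8)
```

With these invented inputs, the tightly coupled system with no pipelines scores far lower than the modular, containerized one, which is the ordering the paragraph above describes.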

Our use of Gen AI accelerates this step by scanning the codebase and surfacing technical debt patterns far faster than manual reviews.

In a modernization for a global insurance leader, readiness analysis of their VB6 system revealed which modules were viable to decouple first. Combined with AI-assisted automation, this helped cut development time by 50% while reducing the risk of destabilizing business-critical workflows.

2. Map Dependencies and Boundaries

Next, you need to understand how the code actually behaves. This involves building a dependency graph that shows which modules talk to which, where data crosses boundaries, and where bottlenecks live.

With Legacyleap, this step is compressed from months to weeks: the platform parses millions of lines of code automatically and surfaces natural service boundaries.

The work here is concrete: map dependencies, trace business flows, and highlight hotspots. Skip this step and you risk splitting blindly and creating a distributed monolith.
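As a rough illustration of what a dependency graph can reveal, the sketch below groups modules that depend on each other in a cycle. Modules in the same cycle are hard to split apart, while modules outside any cycle are easier extraction candidates. The module names and the `deps` map are hypothetical, and a real analysis would be derived from parsed code rather than a hand-written dictionary.

```python
def reachable(graph, start):
    """Return every module reachable from `start` by following dependencies."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def coupled_clusters(graph):
    """Group modules that can reach each other (strongly connected components).

    Uses pairwise reachability, which is fine for a small illustrative graph
    but too slow for a very large codebase.
    """
    nodes = set(graph) | {dep for deps in graph.values() for dep in deps}
    reach = {node: reachable(graph, node) for node in nodes}
    clusters, assigned = [], set()
    for node in sorted(nodes):
        if node in assigned:
            continue
        cluster = {node} | {other for other in reach[node] if node in reach[other]}
        assigned |= cluster
        clusters.append(sorted(cluster))
    return clusters


# Hypothetical module-level dependencies: "orders" and "billing" call each other.
deps = {
    "orders": ["billing", "inventory"],
    "billing": ["orders", "ledger"],
    "inventory": ["catalog"],
    "reporting": ["orders", "ledger"],
    "catalog": [],
    "ledger": [],
}

clusters = coupled_clusters(deps)
```

Here `orders` and `billing` land in one cluster because each depends on the other, so extracting either one alone would leave a chatty cross-service cycle behind. `reporting` sits in a cluster of its own, which makes it a simpler first candidate.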

In a semiconductor modernization, dependency mapping uncovered hidden couplings in ColdFusion apps that would have made a straight replatform risky. By isolating those early, the team accelerated migration by 60% without introducing instability.

3. Extract the First Service

Now, refactor one candidate module into an independent microservice. Start small (billing, order management, reporting), something with clear inputs and outputs.

With Legacyleap, this refactoring is accelerated by AI-generated service skeletons and auto-translated logic, validated against the original monolith for functional parity. Teams typically see a 50–70% reduction in manual effort at this stage.

In a transport tech project, the first candidate was an order management module inside a sprawling EJB3 system. Refactoring it into a Spring Boot microservice immediately cut feature delivery cycles by 50%, while keeping the rest of the platform intact.

4. Deploy Safely

Once refactored, the new service should not be unleashed all at once. Use canary releases or blue-green deployments to gradually shift traffic.

Legacyleap generates the deployment artifacts (Dockerfiles, Helm charts, Terraform scripts), so you can integrate with Kubernetes or your existing cloud pipeline.

The goal at this stage: roll out in stages, monitor real traffic, and keep a rollback ready in case anything goes wrong.
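The traffic-shifting idea behind a canary release can be sketched in a few lines. In practice the split usually lives in a load balancer, ingress controller, or service mesh rather than application code, so treat this as an illustration of the logic only. The function name `route_request` and the target labels are hypothetical.

```python
import hashlib


def route_request(user_id: str, canary_percent: int) -> str:
    """Send a stable slice of users to the new service.

    Hashing the user ID keeps each user on the same side of the split
    between requests, and raising the percentage only ever adds users
    to the canary group.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable value in 0..99
    return "new-service" if bucket < canary_percent else "monolith"
```

Setting `canary_percent` to 0 is the rollback: every request goes back to the monolith without a redeploy.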

5. Monitor and Iterate

Migration doesn’t end at deployment. Distributed systems introduce new challenges: data consistency, network latency, and inter-service failures.

This is why observability – tracing, logging, metrics – is non-negotiable. Legacyleap bakes these safety nets into the modernization process by generating regression tests and enabling parity checks between old and new flows.
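A parity check can be as simple as replaying the same inputs through the old and new code paths and recording every difference. The sketch below assumes both paths can be called as plain functions. The shipping-fee functions are invented for illustration, with a deliberate boundary bug in the new one.

```python
def parity_mismatches(legacy, candidate, inputs):
    """Run both implementations on each input and collect any differences."""
    mismatches = []
    for case in inputs:
        expected = legacy(case)
        actual = candidate(case)
        if expected != actual:
            mismatches.append((case, expected, actual))
    return mismatches


def legacy_shipping_cents(order_total_cents: int) -> int:
    # Hypothetical legacy rule: free shipping at 5000 cents and above.
    return 0 if order_total_cents >= 5000 else 499


def new_shipping_cents(order_total_cents: int) -> int:
    # Hypothetical rewrite with an off-by-one: ">" instead of ">=".
    return 0 if order_total_cents > 5000 else 499


report = parity_mismatches(
    legacy_shipping_cents, new_shipping_cents, [100, 4999, 5000, 5001]
)
```

Only the boundary input 5000 shows up in `report`, which is the kind of edge case that is easy to miss in manual review.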

In a fuel distribution software modernization, Legacyleap auto-generated regression suites and parity checks that caught data duplication issues early. The outcome: 70% automation, 80% less manual effort, and, most importantly, zero downtime during rollout across a live customer base.

* * *

Key takeaway: Migration is not a single leap but a staged evolution: assess, map, extract, deploy, monitor, and repeat. With the right platform and process, you can minimize risk, maximize learning, and scale your system safely without falling into the traps of premature splitting or uncontrolled service sprawl.

If you’d like to explore how Gen AI fits into the modernization workflow, also read: How Can Gen AI Drive Every Step of Your Modernization Journey?

Migration in Practice: Industry Perspectives

Architecture decisions don’t happen in a vacuum. The right balance between monolith and microservices depends heavily on the industry’s regulatory, operational, and scalability demands.

Here’s how the trade-offs play out in three of the most common sectors we work with:

Healthcare & HealthTech: Compliance-First Modernization

In healthcare, architecture choices are dictated by regulation as much as scale. HIPAA compliance, audit trails, and patient data segregation make consistency non-negotiable. Monoliths simplify this, but they slow down modular releases for claims, analytics, or patient portals.

A hybrid model works best: keep sensitive data modules monolithic for integrity while moving patient-facing workloads into microservices.

Legacyleap’s AI accelerates this by mapping PHI flows, auto-generating audit logs, and validating parity through regression tests, cutting compliance review cycles by up to 60%.

BFSI: Transaction Integrity at Scale

Banking, financial services, and insurance demand transactional accuracy, fraud detection, and regulatory compliance (PCI DSS, KYC). Monoliths enforce ACID guarantees naturally, but they hinder the agility needed for fraud engines or new payment services. Microservices offer flexibility, but without discipline, they weaken integrity across transactions.

A service-first microservices approach is most effective. Legacyleap’s AI maps dependencies to keep core ledger services intact while decoupling modules like fraud detection. Auto-generated test suites simulate thousands of transaction flows, reducing manual validation by up to 70% and ensuring compliance without slowing delivery.

Manufacturing: Low-Latency IoT Workloads

Manufacturing systems process IoT events in real time: machine telemetry, plant coordination, supplier updates. Monoliths buckle under this load, while microservices handle distributed event streaming more effectively. The challenge is synchronizing across environments without adding downtime risk.

A microservices-first architecture works best here, with AI-powered observability to maintain system-wide consistency. Legacyleap auto-generates service skeletons for event processing and flags anomalies during rollout, lowering downtime risk to near zero while ensuring predictable scaling of IoT workloads.

Solving for Data Consistency in Microservices

Moving to microservices unlocks scalability, team autonomy, and modular deployment. But it also introduces some of the hardest architectural challenges, especially around data consistency, communication, and coordination.

Here’s how our CTO breaks it down based on real-world experience:

1. Distributed Data Ownership

Microservices decentralize data, which introduces fragmentation.

Solution: Use event-driven sync (e.g., Kafka) to reflect changes across services and maintain system-wide state.

2. Eventual vs. Strong Consistency

Not every use case tolerates lag.

Solution: Match consistency models to business needs. Use Transactional Outbox, CDC, or queue-based patterns to ensure alignment.

3. Data Duplication

Duplication is inevitable, but inconsistency doesn’t have to be.

Solution: Use change data capture and real-time event streams to sync copies across services.

4. Distributed Transactions

Coordinating across services is non-trivial.

Solution: Apply the Saga pattern or orchestration tools like Temporal to manage workflows and automate failure handling.
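The core of an orchestrated saga is small: run each step in order, and if one fails, run the compensations for the completed steps in reverse. The sketch below is a plain in-process illustration of that control flow, not the Temporal API, and it leaves out what a real orchestrator adds (durable state, retries, timeouts). The step names and the failing payment step are hypothetical.

```python
from typing import Callable, List, Tuple

# Each step is (name, action, compensation).
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]):
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed = []
    log = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception:
            log.append(f"failed:{name}")
            for done_name, undo in reversed(completed):
                undo()
                log.append(f"compensated:{done_name}")
            return False, log
        log.append(f"done:{name}")
        completed.append((name, compensate))
    return True, log


# Hypothetical order workflow where the payment step fails.
state = {"reserved": False, "charged": False}


def reserve_inventory():
    state["reserved"] = True


def release_inventory():
    state["reserved"] = False


def charge_payment():
    raise RuntimeError("card declined")


def refund_payment():
    state["charged"] = False


ok, log = run_saga([
    ("reserve_inventory", reserve_inventory, release_inventory),
    ("charge_payment", charge_payment, refund_payment),
])
```

After the run, `ok` is false and the inventory reservation has been released, so no service is left holding state for an order that never completed.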

5. Latency in Communication

Network hops slow everything down.

Solution: Design for async wherever possible. Use messaging queues and lightweight protocols to minimize delays.
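To show what designing for async means at the code level, the sketch below has a request handler enqueue work and return immediately while a background worker does the slow part. An in-process `asyncio.Queue` stands in for a real message broker, and the handler and worker names are hypothetical.

```python
import asyncio


async def email_worker(queue, sent):
    """Drain the queue in the background, one message at a time."""
    while True:
        order_id = await queue.get()
        await asyncio.sleep(0.01)  # stands in for a slow downstream call
        sent.append(order_id)
        queue.task_done()


async def handle_checkout(queue, order_id):
    # Enqueue and return; the caller does not wait for the email to be sent.
    await queue.put(order_id)
    return {"order_id": order_id, "status": "accepted"}


async def main():
    queue = asyncio.Queue()
    sent = []
    worker = asyncio.create_task(email_worker(queue, sent))
    responses = [await handle_checkout(queue, order_id) for order_id in (1, 2, 3)]
    await queue.join()  # wait for the worker here only so the demo can inspect `sent`
    worker.cancel()
    try:
        await worker
    except asyncio.CancelledError:
        pass
    return responses, sent


responses, sent = asyncio.run(main())
```

The checkout path no longer pays for the slow call. The trade-off is that the caller only learns the request was accepted, not that the downstream work succeeded.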

Key Takeaway: With the right patterns, reliable messaging, and architecture-aware tooling, you can scale your systems safely.

Architecture Failure Patterns to Avoid

Moving to microservices requires discipline, or you can end up with architectures that are harder to manage than the monolith you started with. Below are the most common failure patterns we see and how to avoid them.

1. Strangled Monolith

- How it happens: Teams chip away at a monolith for years, extracting service after service without a clear roadmap. The result is neither a clean monolith nor a true microservices ecosystem.

- Why it’s harmful: It creates coordination drag, endless redeploy fatigue, and mounting technical debt.

- How to prevent it: Define a migration strategy up front. Use readiness scoring and service boundary mapping to prioritize modules and set realistic milestones.

2. Zombie Hybrid

- How it happens: Services are split out, but the database remains shared. Every “independent” service still leans on the same schema.

- Why it’s harmful: Instead of autonomy, you get brittle coupling, schema lock-in, and ripple-effect failures.

- How to prevent it: Enforce data ownership per service. Use AI-driven dependency mapping to surface cross-service database calls early and refactor before rollout.
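One simple way to spot a Zombie Hybrid early is to list which tables each service touches and flag any table with more than one accessor. The sketch below assumes you already have that access map, for example from query logs or static analysis. The service and table names are hypothetical.

```python
from collections import defaultdict


def shared_tables(access_map):
    """Return tables touched by more than one service, with the services involved."""
    accessors = defaultdict(set)
    for service, tables in access_map.items():
        for table in tables:
            accessors[table].add(service)
    return {
        table: sorted(services)
        for table, services in sorted(accessors.items())
        if len(services) > 1
    }


# Hypothetical map of service -> tables it reads or writes.
access_map = {
    "orders-service": ["orders", "customers"],
    "billing-service": ["invoices", "customers", "orders"],
    "catalog-service": ["products"],
}

violations = shared_tables(access_map)
```

Each entry in `violations` is a table that needs a single owner, with the other services moved to an API call or an event feed.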

3. Fractured Mesh

- How it happens: Teams over-engineer service-to-service interactions. Instead of clean APIs, you end up with a web of direct dependencies.

- Why it’s harmful: Debugging becomes a nightmare. Latency and cascading failures creep in as the number of services grows.

- How to prevent it: Decouple with clear contracts. Use queues or async messaging for non-critical paths. Bake in observability so that inter-service communication is transparent.

4. Event Storm

- How it happens: In pursuit of scalability, teams jump headfirst into event-driven design, wiring everything through streams and brokers.

- Why it’s harmful: For simple CRUD or reporting apps, it adds complexity, increases latency, and burdens teams with operational overhead.

- How to prevent it: Let the architecture fit the problem. Use events where they unlock real value (high-volume telemetry, IoT coordination) but don’t force them where a request-response works better.

Other Pitfalls to Watch For

Alongside these patterns, a few other traps often appear:

- Premature splitting: Breaking apart systems too early leads to service sprawl with no real autonomy.

- Poor service boundaries: Without clear domain-driven design, services step on each other, duplicating data and logic.

- Lack of observability: Without tracing and metrics, debugging distributed systems turns into guesswork.

- Undisciplined CI/CD: Microservices demand automation; manual pipelines cause chaos at scale.

- Overengineering: Don’t build Kafka pipelines and retries for a CRUD app. Match solution complexity to the actual workload.

Microservices are not a badge of maturity. Avoiding these failure patterns comes down to clarity of scope, disciplined execution, and the right balance between automation and oversight.

Real-World Lessons: Switching In, Switching Back

Big tech made microservices famous, but not every company that adopted them stuck with them. These stories show both sides of the evolution.

Who Switched to Microservices

- Amazon: As Amazon scaled, its monolithic architecture began to bottleneck innovation. Shifting to microservices allowed teams to build, deploy, and scale independently. The trade-off? Increased orchestration and monitoring complexity, but it paid off at their scale.

- Netflix: In 2009, Netflix moved from a monolith to microservices to address uptime and delivery constraints. With this shift, they enabled global feature rollout, independent team velocity, and rapid iteration, all supported by a robust DevOps culture.

Who Switched Back (or Paused)

- Segment: Segment split into 50+ microservices early, and paid the price in debugging pain and deployment friction. They eventually consolidated critical workflows back into a monolith, gaining simplicity and speed without losing modularity.

- Auth0: Initially enthusiastic, Auth0 later reverted parts of its architecture to a monolith. Why? Monitoring gaps, slow iteration cycles, and the operational burden of microservices outpaced the benefits. Their solution: a hybrid model tuned for control and velocity.

It’s important to remember that microservices aren’t the final form. Architecture is dynamic. The right decision is the one that supports your speed, scale, and sanity today, not one built for a hypothetical tomorrow.

How Legacyleap Supports Monolith to Microservices Modernization

Moving from a monolith to microservices is complex, especially when legacy code is undocumented, tightly coupled, or business-critical. Legacyleap makes this transition practical, faster, and less risky through a Gen AI-powered platform designed specifically for large-scale modernization.

Deep system comprehension

- Uses graph-powered analysis (Neo4j-based) to map dependencies, class relationships, and business logic across millions of lines of code.

- Automatically surfaces natural service boundaries and risk areas — critical before decoupling anything.

AI-driven refactoring

- Leverages Gen AI and compiler techniques to restructure monolithic code into modular services.

- Supports code translation across legacy (like EJB2, VB6) and modern stacks (.NET, Spring Boot).

- Helps infer missing logic, detect edge cases, and preserve functional behavior across transformation.

Targeted, cloud-native outcomes

- Converts legacy applications into Kubernetes-ready microservices with Dockerfiles, Helm charts, and service configurations generated automatically.

- Supports both .NET Core and Spring Boot — enabling tech-aligned microservice migration paths.

Phased rollouts with built-in safety nets

- Supports gradual, module-by-module rollout when a full rewrite isn’t viable.

- Generates regression tests to ensure parity between old and new services — minimizing risk and rework.

- Aligns to modern deployment strategies like canary and blue-green for safer releases.

Why this matters:

Modernization at scale can’t rely on manual refactoring and guesswork. Legacyleap gives engineering teams the visibility, structure, and automation needed to break apart monoliths with confidence and deliver real business agility.

Wrapping Up: Choose Structure, Not Hype

Monoliths aren’t outdated. Microservices aren’t magic. Architecture is about constraints, trade-offs, and what your team can sustain.

What matters most is clarity:

- Can your system scale what needs to scale?

- Can teams build and ship without stepping on each other?

- Can you debug issues fast and deploy fixes faster?

Gen AI and automation make migrations more feasible than ever. But tools don’t replace judgment. The best teams choose architecture that matches their stage, not their ambition.

Ready to explore what structure works for your modernization goals?

Start with visibility. At Legacyleap, we help teams map, refactor, and transition systems at scale with clarity, control, and AI-powered insight.

We’re offering to modernize one codebase at no cost as part of showcasing how Gen AI accelerates the shift from monolith to microservices. See how structure drives real progress.